Problem Statement

Design a food rating system that can do the following:

- Modify the rating of a food item listed in the system.

- Return the highest-rated food item for a type of cuisine in the system.

Implement the FoodRatings class:

- FoodRatings(String[] foods, String[] cuisines, int[] ratings) Initializes the system. The food items are described by foods, cuisines and ratings, all of which have a length of n. foods[i] is the name of the ith food, cuisines[i] is the type of cuisine of the ith food, and ratings[i] is the initial rating of the ith food.

- void changeRating(String food, int newRating) Changes the rating of the food item with the name food.

- String highestRated(String cuisine) Returns the name of the food item that has the highest rating for the given type of cuisine. If there is a tie, return the item with the lexicographically smaller name.

Note that a string x is lexicographically smaller than string y if x comes before y in dictionary order, that is, either x is a prefix of y, or if i is the first position such that x[i] != y[i], then x[i] comes before y[i] in alphabetic order.
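For lowercase English strings such as the ones in this problem, the ordering described above matches what Java's String.compareTo already computes. A minimal sketch, where LexOrderDemo and isLexSmaller are hypothetical names used only for illustration:

```java
class LexOrderDemo {
    // Hypothetical helper: true when x comes before y in dictionary order.
    // String.compareTo returns a negative number when x is a proper prefix of y,
    // or when x has the smaller character at the first position where they differ.
    static boolean isLexSmaller(String x, String y) {
        return x.compareTo(y) < 0;
    }
}
```

With this helper, isLexSmaller("ramen", "sushi") is true, which is the tie-break used in the example below.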

Example 1:

Input

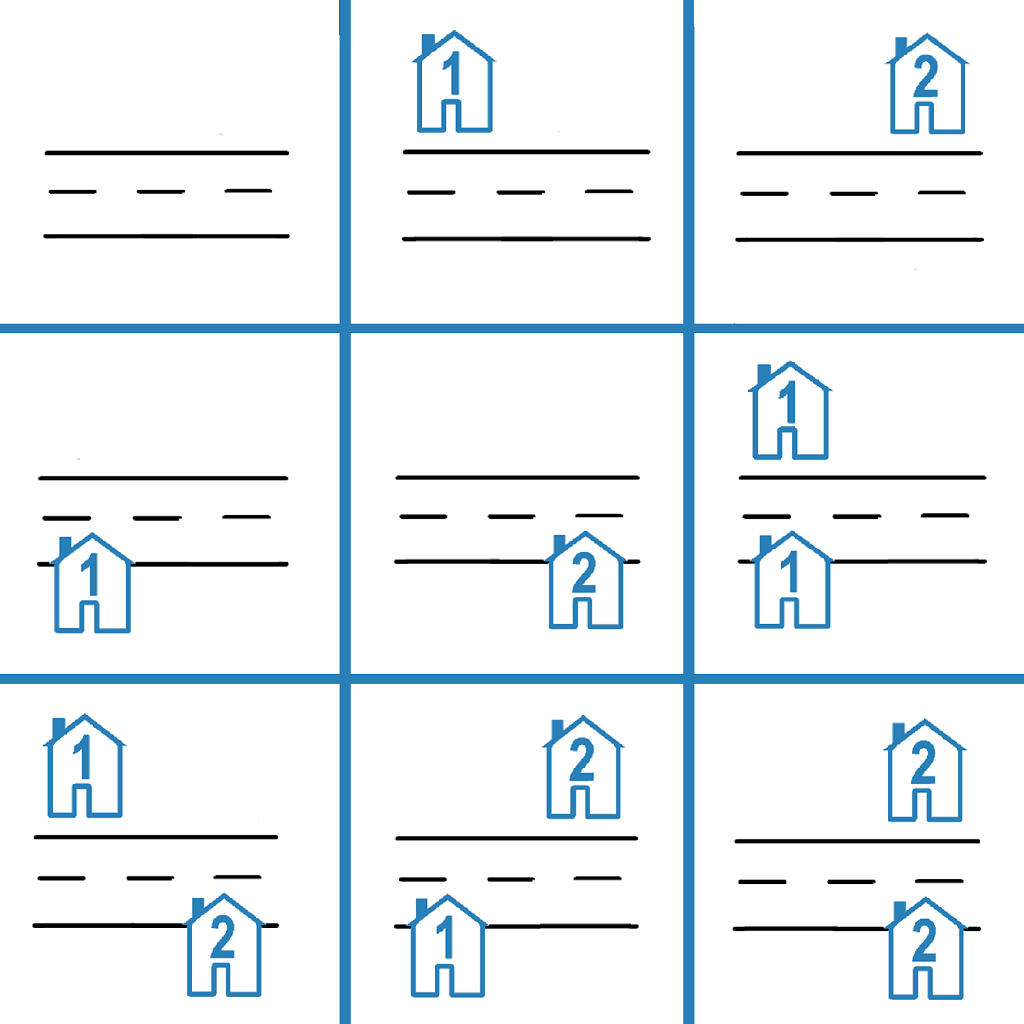

["FoodRatings", "highestRated", "highestRated", "changeRating", "highestRated", "changeRating", "highestRated"]

[[["kimchi", "miso", "sushi", "moussaka", "ramen", "bulgogi"], ["korean", "japanese", "japanese", "greek", "japanese", "korean"], [9, 12, 8, 15, 14, 7]], ["korean"], ["japanese"], ["sushi", 16], ["japanese"], ["ramen", 16], ["japanese"]]

Output

[null, "kimchi", "ramen", null, "sushi", null, "ramen"]

Explanation

FoodRatings foodRatings = new FoodRatings(["kimchi", "miso", "sushi", "moussaka", "ramen", "bulgogi"], ["korean", "japanese", "japanese", "greek", "japanese", "korean"], [9, 12, 8, 15, 14, 7]);

foodRatings.highestRated("korean"); // return "kimchi"

// "kimchi" is the highest rated korean food with a rating of 9.

foodRatings.highestRated("japanese"); // return "ramen"

// "ramen" is the highest rated japanese food with a rating of 14.

foodRatings.changeRating("sushi", 16); // "sushi" now has a rating of 16.

foodRatings.highestRated("japanese"); // return "sushi"

// "sushi" is the highest rated japanese food with a rating of 16.

foodRatings.changeRating("ramen", 16); // "ramen" now has a rating of 16.

foodRatings.highestRated("japanese"); // return "ramen"

// Both "sushi" and "ramen" have a rating of 16.

// However, "ramen" is lexicographically smaller than "sushi".

Constraints:

- 1 <= n <= 2 * 10^4

- n == foods.length == cuisines.length == ratings.length

- 1 <= foods[i].length, cuisines[i].length <= 10

- foods[i], cuisines[i] consist of lowercase English letters.

- 1 <= ratings[i] <= 10^8

- All the strings in foods are distinct.

- food will be the name of a food item in the system across all calls to changeRating.

- cuisine will be a type of cuisine of at least one food item in the system across all calls to highestRated.

- At most 2 * 10^4 calls in total will be made to changeRating and highestRated.

Video Tutorial

You can find the detailed video tutorial here

Thought Process

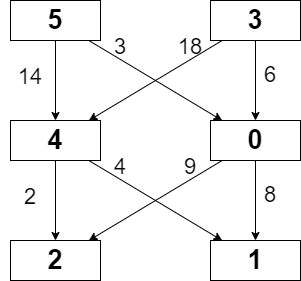

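If we want to optimize the highestRated API call (which we should), keeping a max heap per cuisine lets us read the top-rated food in O(1). The trade-off is that changeRating has to reposition the food inside its heap, so it is no longer a constant-time update. Some implementation details: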

- Making each food a class keeps the code clean, and implementing the Comparable interface lets the max heap maintain the correct order on insert and remove.

- Keep O(1) access to each Food object and to each cuisine's heap by maintaining two HashMaps:

  - foodIndex, Map<String, Food>. Key: food name, Value: Food object

  - cuisineIndex, Map<String, Queue<Food>>. Key: cuisine name, Value: max heap ordered by rating

- Follow-up thought: if this were a system design question, how would you actually build it? It is worth reading about how YouTube calculates total views.
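The Food class and the two maps described above can be sketched in Java as follows. This is one possible implementation under stated assumptions, not necessarily the code from the video: the notes do not say how changeRating updates the heap, so the remove-then-re-add step here is an assumption. java.util.PriorityQueue.remove(Object) takes linear time, so changeRating is O(n) in the size of the cuisine in this sketch, while highestRated stays O(1).

```java
import java.util.HashMap;
import java.util.Map;
import java.util.PriorityQueue;
import java.util.Queue;

class FoodRatings {
    // One object per food. The natural ordering puts the highest rating first
    // and breaks ties by the lexicographically smaller name.
    private static final class Food implements Comparable<Food> {
        final String name;
        final String cuisine;
        int rating;

        Food(String name, String cuisine, int rating) {
            this.name = name;
            this.cuisine = cuisine;
            this.rating = rating;
        }

        @Override
        public int compareTo(Food other) {
            if (rating != other.rating) {
                // Reversed arguments: a higher rating sorts earlier (max heap behavior).
                return Integer.compare(other.rating, rating);
            }
            return name.compareTo(other.name);
        }
    }

    // Key: food name, Value: Food object.
    private final Map<String, Food> foodIndex = new HashMap<>();
    // Key: cuisine name, Value: heap whose head is the best food of that cuisine.
    private final Map<String, Queue<Food>> cuisineIndex = new HashMap<>();

    public FoodRatings(String[] foods, String[] cuisines, int[] ratings) {
        for (int i = 0; i < foods.length; i++) {
            Food food = new Food(foods[i], cuisines[i], ratings[i]);
            foodIndex.put(food.name, food);
            cuisineIndex.computeIfAbsent(food.cuisine, k -> new PriorityQueue<>()).add(food);
        }
    }

    public void changeRating(String food, int newRating) {
        Food target = foodIndex.get(food);
        Queue<Food> heap = cuisineIndex.get(target.cuisine);
        // Assumed update strategy: remove before mutating the rating, because
        // changing a key while the object sits in the heap would corrupt the order.
        heap.remove(target);
        target.rating = newRating;
        heap.add(target);
    }

    public String highestRated(String cuisine) {
        // The constraints guarantee the cuisine exists and has at least one food.
        return cuisineIndex.get(cuisine).peek().name;
    }
}
```

If the linear-time removal becomes a concern, two common alternatives are a TreeSet per cuisine (O(log n) removal) or leaving stale entries in the heap and discarding them lazily when they reach the top.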